Choosing the Right LLM: A Practical Cost-Benefit Analysis

It seems impossible to have a conversation about future-proofing a business these days without generative AI and LLMs entering the discussion. Enterprises embarking on this exciting, yet daunting, LLM integration journey often face the decision of selecting the model best suited to their use case. This article examines the key factors that should shape your selection strategy and offers practical insights to help you make well-informed decisions.

Generative AI, broadly speaking, refers to a group of algorithms and models capable of generating new content that resembles, and in many cases is indistinguishable from, human-created content. This content can be images, music, video, and, of course, text.

Large Language Models (LLMs) are a specific type of generative AI model that are particularly focused on understanding and generating human language. These models have a wide range of applications, including chatbots, language translation, text generation, summarization, and more.

Examples of LLMs range from proprietary models, also referred to as paid models, such as OpenAI’s GPT and Google’s Bard and Gemini, to open-source models, such as Meta’s Llama 2, Falcon, Mistral, OPT, MosaicML’s MPT, and Vicuna.

These models are a powerful advancement in Natural Language Processing (NLP) and are trained on vast amounts of text data, allowing them to generate coherent and contextually relevant text-based responses when prompted.

Let’s go through each key consideration – Performance, Model Dimensions, Usage Rights, and Cost – breaking down the advantages and limitations of Open-Source and Proprietary Models in the process.

Delving into Performance: Speed and Precision

Beyond generating human-like text, inference speed and precision are significant factors that affect how well an LLM can deliver a response. Inference speed refers to the time it takes for a model to receive an input and generate an output. It is particularly important for user-facing applications like virtual assistants and real-time translation services.
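One practical way to compare candidate models on speed is to time them on your own prompts. The sketch below assumes a streaming interface and measures two numbers commonly reported for LLMs: time to first token (how long a user waits before text starts appearing) and total latency. `measure_latency` and `fake_stream` are hypothetical helpers; in practice you would wrap your provider's or self-hosted server's streaming API so that it yields output tokens the same way.

```python
import time
from typing import Callable, Iterable


def measure_latency(stream_fn: Callable[[str], Iterable[str]], prompt: str) -> dict:
    """Time one streaming generation call.

    stream_fn is any callable that takes a prompt and yields output tokens
    (hypothetical: adapt your provider's or server's streaming API to this shape).
    """
    start = time.perf_counter()
    first_token_at = None
    n_tokens = 0
    for _ in stream_fn(prompt):
        if first_token_at is None:
            first_token_at = time.perf_counter()  # time to first token ends here
        n_tokens += 1
    end = time.perf_counter()

    total = end - start
    ttft = (first_token_at - start) if first_token_at is not None else total
    # Throughput over the generation phase, i.e. after the first token arrived.
    gen_time = (end - first_token_at) if first_token_at is not None else 0.0
    tps = (n_tokens - 1) / gen_time if n_tokens > 1 and gen_time > 0 else 0.0
    return {"ttft_s": ttft, "total_s": total, "tokens": n_tokens, "tokens_per_s": tps}


def fake_stream(prompt: str):
    """Hypothetical stand-in for a model: emits three tokens with a small delay."""
    for token in ["Hello", ",", " world"]:
        time.sleep(0.01)
        yield token


stats = measure_latency(fake_stream, "Say hello")
print(stats)
```

Single measurements are noisy, so run each candidate several times with representative prompts and compare medians rather than one-off timings.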

Paid models are often optimized for performance, with dedicated infrastructure to support rapid response times. This can offer a significant advantage if delivering a streamlined user experience is essential for your organization. For instance, OpenAI’s GPT models run on dedicated Microsoft Azure AI supercomputing infrastructure optimized for large models.

Conversely, because open-source models can be hosted on various infrastructures, from on-premises servers to cloud-based services (such as Amazon SageMaker and Amazon Bedrock, Microsoft Azure AI, and Google Vertex AI), their inference speed can vary widely. Ultimately, inference speed will depend heavily on the computational resources your organization has available and the expertise of the team responsible for optimizing the setup.

Key Takeaway

While some open-source models can rival paid models in performance speed, achieving this often requires significant investment in hardware and optimization.

Finding the Right Model Dimensions: Balancing Context Length and Model Size

When it comes to an LLM’s effectiveness in processing and generating text, two fundamental components affect the model’s performance: context length and model size.

Key Takeaway

If your organization will be utilizing an LLM for a dialogue system, or an application where maintaining the thread of a conversation is essential, finding an LLM with a large context length and model size is especially important.

Context Length

Context length refers to the maximum number of tokens the LLM can take into account at once, covering both the input prompt and the generated response. In real-world applications, the number of tokens in play grows quickly with the length of the prompt and any conversation history sent along with it.

For example, a prompt of 1-2 sentences uses about 30 tokens, while a text of about 1,500 words uses about 2,048 tokens. LLMs with a larger context length can generate coherent and contextually relevant text over longer interactions. GPT-3.5 Turbo, for example, has been offered with 4k and 16k context lengths, and GPT-4 with 8k and 32k.

Similarly, open-source models have been designed with different context length capacities, and these are often influenced by the architecture of the model and the computational resources available for LLM development, training, and deployment.
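To make these numbers concrete, the sketch below estimates token counts with the word-based rule of thumb implied by the figures above (about 0.75 words per token, so 1,500 words comes to roughly 2,000 tokens). It also shows one common way a dialogue application keeps a conversation within a fixed context length: dropping the oldest turns first. The heuristic and the helper names are assumptions for illustration; for exact counts, use the tokenizer of the model you are targeting (for OpenAI models, the `tiktoken` library).

```python
import math

# Rough rule of thumb for English text: 1 token is about 0.75 words.
# Exact counts require the target model's own tokenizer.
WORDS_PER_TOKEN = 0.75


def estimate_tokens(text: str) -> int:
    """Approximate token count from a whitespace word count."""
    return math.ceil(len(text.split()) / WORDS_PER_TOKEN)


def fits_context(prompt: str, max_output_tokens: int, context_length: int) -> bool:
    """Check that the prompt plus the reserved output budget fits the context window."""
    return estimate_tokens(prompt) + max_output_tokens <= context_length


def trim_history(messages: list[str], budget_tokens: int) -> list[str]:
    """Keep the most recent messages whose combined estimated size fits the budget."""
    kept: list[str] = []
    used = 0
    for message in reversed(messages):  # walk from newest to oldest
        cost = estimate_tokens(message)
        if used + cost > budget_tokens:
            break
        kept.append(message)
        used += cost
    return list(reversed(kept))  # restore chronological order


document = "word " * 1500
print(estimate_tokens(document))               # 2000
print(fits_context(document, 1000, 4096))      # True
print(fits_context(document, 1000, 2048))      # False

history = ["one two three", "four five six", "seven eight nine"]
print(trim_history(history, budget_tokens=8))  # ['four five six', 'seven eight nine']
```

Dropping the oldest turns is the simplest strategy; applications that need long-range memory often summarize older turns instead, at the cost of extra model calls.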

Model Size

The size of an LLM, typically measured by the number of parameters it contains, is another important contributor to performance. As with context length, a larger model generally has a greater capacity for understanding and generating text. Larger paid models like OpenAI’s GPT-3 boast an impressive 175 billion parameters.

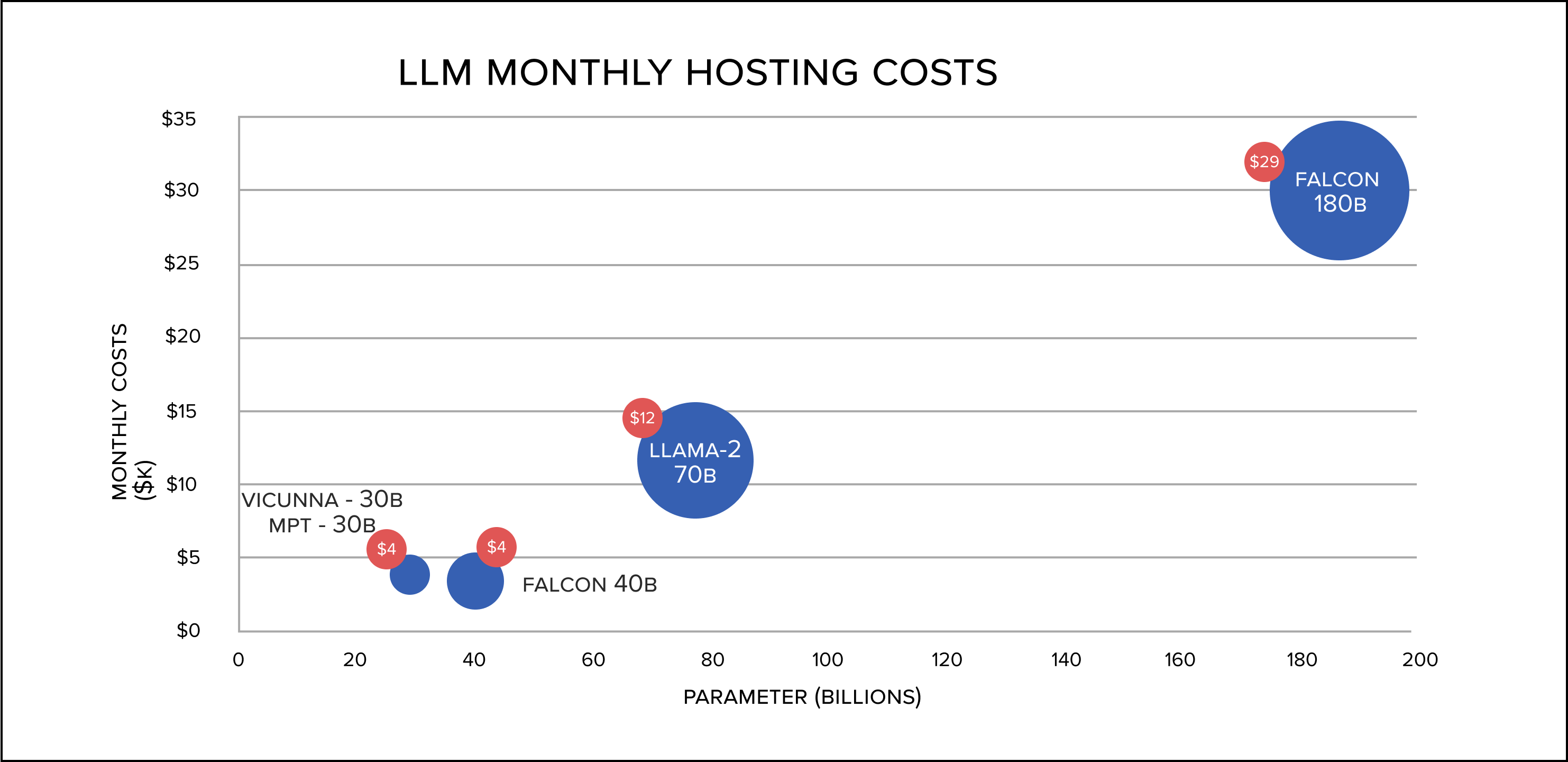

Open-source models come in a range of sizes (Falcon: 180B and 40B, Llama 2: 70B, MPT: 30B, and Vicuna: 33B parameters), giving organizations the flexibility to choose a model that balances performance against infrastructure constraints.
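Parameter count translates directly into hardware requirements when you host a model yourself. A common back-of-the-envelope estimate is parameters × bytes per parameter, where the bytes depend on numeric precision (4 for fp32, 2 for fp16/bf16, 1 for 8-bit, and roughly 0.5 for 4-bit quantization). The sketch below applies that estimate; it covers model weights only and ignores the KV cache, activations, and framework overhead, so treat the results as a lower bound.

```python
# Approximate storage per parameter at common precisions.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "bf16": 2, "int8": 1, "int4": 0.5}


def weight_memory_gb(params_billion: float, precision: str = "fp16") -> float:
    """Memory for model weights alone, in GB (1 GB = 1e9 bytes).

    Billions of parameters times bytes per parameter gives GB directly.
    Excludes KV cache, activations, and runtime overhead.
    """
    return params_billion * BYTES_PER_PARAM[precision]


for name, size_b in [("Falcon", 180), ("Llama 2", 70), ("MPT", 30)]:
    print(f"{name} {size_b}B: ~{weight_memory_gb(size_b):.0f} GB at fp16, "
          f"~{weight_memory_gb(size_b, 'int4'):.0f} GB at 4-bit")
```

For example, a 70B-parameter model needs roughly 140 GB for its weights at fp16, more than a single 80 GB GPU can hold, which is why quantization or multi-GPU serving is common for models of that size.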

Navigating Usage Rights

When it comes to selecting a model for LLM integration, understanding the licensing terms and usage rights of paid and open-source models is just as important as assessing the technical capabilities of the model itself.

OpenAI’s GPT models are proprietary, so their use is governed by OpenAI’s licensing terms. These models are typically accessed via an API, with pricing based on usage volume (primarily the number of input and output tokens processed) and with rate limits on tokens and API calls.

Open-source models are typically available under licenses that allow more flexibility in how they can be used and modified, including hosting the model on your own infrastructure. License terms still vary from model to model, so review each one before committing.

Key Takeaway

Understanding privacy, security, and performance requirements for your intended use cases is critical in modeling usage and selecting your LLM.

Now that we’ve established the influencing factors, let’s go through the specific elements that play into cost.

Weighing Your Words: A Cost-Benefit Breakdown

The decision between paid and open-source LLMs deserves a thorough cost-benefit analysis. Paid models often prioritize performance, leveraging optimized infrastructure for rapid response times. While open-source models can match this, they require the right setup and careful resource management.

Implications and Hidden Expenses

As previously mentioned, paid models typically operate on a subscription or usage-based pricing model, with costs tied to the number of tokens processed. While this can offer predictability and ease of budgeting, the costs can accumulate quickly.

To demonstrate, let’s look at three hypothetical GPT-4 input scenarios and their associated costs:

As you can see, the total cost can increase rapidly, especially for prompts with longer inputs.
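The arithmetic behind usage-based pricing is straightforward: each request costs its input tokens times the input rate plus its output tokens times the output rate, and that cost is multiplied by request volume. The sketch below uses GPT-4 (8K) launch list prices of $0.03 per 1K input tokens and $0.06 per 1K output tokens purely as example rates; prices change, so substitute current figures from your provider. The three scenarios and the volume of 1,000 requests per day are hypothetical.

```python
def request_cost(input_tokens: int, output_tokens: int,
                 input_price_per_1k: float, output_price_per_1k: float) -> float:
    """Cost in dollars of a single request under per-1K-token pricing."""
    return (input_tokens / 1000) * input_price_per_1k + (output_tokens / 1000) * output_price_per_1k


def monthly_cost(requests_per_day: int, input_tokens: int, output_tokens: int,
                 input_price_per_1k: float, output_price_per_1k: float, days: int = 30) -> float:
    """Projected monthly spend for a steady request volume."""
    per_request = request_cost(input_tokens, output_tokens, input_price_per_1k, output_price_per_1k)
    return requests_per_day * days * per_request


# Example rates (GPT-4 8K launch pricing); check your provider's current price list.
INPUT_PRICE, OUTPUT_PRICE = 0.03, 0.06

# Hypothetical scenarios: (label, input tokens, output tokens) per request.
scenarios = [("short prompt", 200, 100), ("medium prompt", 1000, 300), ("long prompt", 6000, 500)]

for label, tokens_in, tokens_out in scenarios:
    monthly = monthly_cost(1000, tokens_in, tokens_out, INPUT_PRICE, OUTPUT_PRICE)
    print(f"{label}: ${monthly:,.2f} per month")
# short prompt: $360.00 per month
# medium prompt: $1,440.00 per month
# long prompt: $6,300.00 per month
```

In the long-prompt case, input tokens account for most of the cost, which is why trimming unnecessary context is one of the most effective cost controls.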

Open-source models, while free to access, come with their own set of costs. These include the infrastructure needed to run the models and potential expenses related to customization, maintenance, and updates.
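One way to compare these fixed costs with per-token pricing is a break-even estimate: the monthly token volume at which API spend equals the cost of keeping your own instances running. The sketch below assumes always-on instances billed hourly; the $5/hour rate, two instances, and blended $0.04 per 1K tokens are hypothetical placeholders, not quotes from AWS or any other provider. It also ignores the customization, maintenance, and update costs mentioned above, which push the real break-even point higher.

```python
HOURS_PER_MONTH = 730  # average hours in a month (8,760 hours per year / 12)


def monthly_hosting_cost(hourly_rate: float, instances: int = 1,
                         hours: float = HOURS_PER_MONTH) -> float:
    """Infrastructure cost of keeping instances running around the clock."""
    return hourly_rate * instances * hours


def break_even_tokens(monthly_fixed_cost: float, blended_price_per_1k: float) -> float:
    """Monthly tokens at which pay-per-token spend equals the fixed hosting cost."""
    return monthly_fixed_cost / blended_price_per_1k * 1000


hosting = monthly_hosting_cost(hourly_rate=5.0, instances=2)    # hypothetical rate
tokens = break_even_tokens(hosting, blended_price_per_1k=0.04)  # hypothetical price
print(f"Hosting: ${hosting:,.0f}/month; break-even at ~{tokens:,.0f} tokens/month")
# Hosting: $7,300/month; break-even at ~182,500,000 tokens/month
```

Below the break-even volume, paying per token is cheaper on infrastructure alone; above it, self-hosting starts to pay off, provided your instances can actually serve that much traffic.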

The graph below shows the relative cost of hosting an open-source LLM on AWS’ computing infrastructure:

Key Takeaway

If you find that a free open-source LLM will be best suited for your business, you must ensure that your organization has the timeline, resources, infrastructure, and team to not only successfully execute the LLM integration but sustain the model as well.

The Cost of Data Privacy

Because paid models process data on the provider’s servers, there can be privacy concerns. Providers typically offer strong security measures, but the risk of data breaches remains. Note also that a given paid model or service tier may not satisfy every regulatory requirement your organization faces, such as HIPAA, GDPR, or CCPA.

Open-source models can offer greater data privacy and security through self-hosted servers, but this approach requires a robust internal infrastructure, which can be costly and complex to manage.

Key Takeaway

Think about your organization's privacy requirements. If your organization demands uncompromising data security and budget is not a concern for your LLM development, an open-source model may be best. However, depending on your infrastructure constraints and the LLM's use case, a paid model may fulfill your privacy requirements while meeting your performance expectations.

Considering Scalability: Understanding Your User Interactions

As load increases, whether through more interactions or more users, the capabilities and limitations of both model types become more significant.

Because paid models are hosted on scalable cloud infrastructure, they can scale seamlessly to absorb fluctuations in demand. Although this scalability comes at a cost, it can be crucial for businesses experiencing rapid growth or those with variable usage patterns.

Open-source models offer the flexibility to be deployed on a variety of infrastructures, from on-premises servers to private or public cloud services. This allows your organization to tailor the application setup to meet the specific demands of your user base and interaction patterns. However, with this model, you would be responsible for ensuring that the infrastructure can handle increased loads, which can involve significant investment in hardware and technical expertise.
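When you run the infrastructure yourself, a first-pass capacity plan often starts from two numbers: the peak request rate you expect and the sustained throughput a single model replica achieves in your own load tests. The sketch below turns those into a replica count with a safety margin; the function name, the 20% headroom default, and the example figures are assumptions, and real sizing should also account for latency targets and request length.

```python
import math


def replicas_needed(peak_rps: float, per_replica_rps: float, headroom: float = 0.2) -> int:
    """Replicas required to serve peak traffic with a safety margin.

    peak_rps: expected peak requests per second.
    per_replica_rps: sustained throughput of one replica, measured by load testing.
    headroom: extra capacity fraction reserved for spikes (0.2 = 20%).
    """
    if per_replica_rps <= 0:
        raise ValueError("per_replica_rps must be positive")
    return max(1, math.ceil(peak_rps * (1 + headroom) / per_replica_rps))


# Hypothetical figures: peak traffic grows from 10 to 50 requests/s,
# and one replica sustains 8 requests/s in load tests.
for peak in (10, 50):
    print(peak, "req/s ->", replicas_needed(peak, per_replica_rps=8), "replicas")
# 10 req/s -> 2 replicas
# 50 req/s -> 8 replicas
```

A fivefold traffic increase here means quadrupling the hardware, and that hardware has to be provisioned before the demand arrives, which is the operational burden the paragraph above describes.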

Key Takeaway

Consider the timeline and nature of your business. If your organization anticipates rapid growth or fluctuations in demand, a paid model will provide more scalability and adaptability throughout your LLM integration process.

Token Usage: Designing Applications with Utilization in Mind

Token usage is a major cost driver for paid models, since pricing is usually based on the number of tokens processed and generated. If your organization has heavy usage patterns, you must carefully manage token consumption to control costs. OpenAI’s API also enforces rate limits, measured in tokens per minute (TPM) and requests per minute (RPM), to ensure fair usage and manage server load. At the time of writing, GPT-4 was listed at 80,000 TPM and 5,000 RPM, though actual limits vary by account and change over time. Because both prompt (input) and response (output) tokens count toward usage, long prompts and verbose responses can exhaust the TPM limit quickly, while many small requests can hit the RPM limit first. Exceeding these limits causes requests to be rejected or throttled until usage drops back under the limit, which can be disruptive for certain applications.
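To stay under TPM and RPM limits, applications often track their own usage on the client side and hold back requests that would exceed the budget, rather than relying on rejected calls. The sketch below is a simple sliding-window tracker over the last 60 seconds; the `RateLimitBudget` class is hypothetical, and it complements, rather than replaces, handling the provider's rate-limit errors with retries and backoff.

```python
import time
from collections import deque
from typing import Callable, Deque, Tuple


class RateLimitBudget:
    """Client-side sliding-window tracker for tokens-per-minute and requests-per-minute."""

    def __init__(self, tpm_limit: int, rpm_limit: int, window_s: float = 60.0,
                 clock: Callable[[], float] = time.monotonic):
        self.tpm_limit = tpm_limit
        self.rpm_limit = rpm_limit
        self.window_s = window_s
        self.clock = clock  # injectable so the behavior can be tested deterministically
        self._events: Deque[Tuple[float, int]] = deque()  # (timestamp, tokens) per admitted request

    def _evict_expired(self, now: float) -> None:
        # Drop requests that have left the sliding window.
        while self._events and now - self._events[0][0] >= self.window_s:
            self._events.popleft()

    def try_acquire(self, tokens: int) -> bool:
        """Record and allow a request if it fits both limits; otherwise return False."""
        now = self.clock()
        self._evict_expired(now)
        tokens_in_window = sum(t for _, t in self._events)
        if len(self._events) + 1 > self.rpm_limit or tokens_in_window + tokens > self.tpm_limit:
            return False
        self._events.append((now, tokens))
        return True


# Usage with a fake clock and small limits so the behavior is easy to follow.
fake_now = [0.0]
budget = RateLimitBudget(tpm_limit=1000, rpm_limit=3, clock=lambda: fake_now[0])
print(budget.try_acquire(600))  # True
print(budget.try_acquire(600))  # False: would exceed 1,000 tokens in the window
fake_now[0] = 61.0
print(budget.try_acquire(600))  # True: the first request has left the window
```

When calling `try_acquire`, pass the prompt's token count plus the maximum output you request, since both count toward usage; a request that returns `False` can be queued and retried once older requests leave the window.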

Open-source models do not have token usage fees, which can be a significant advantage for applications with high token turnover.

Key Takeaway

Understanding your application and its usage patterns is paramount during LLM development. If you expect your LLM application to experience high traffic or unpredictable usage, an open-source model can offer more predictable costs, but you may sacrifice other advantages like scalability. It's essential to consider the total cost of ownership when evaluating this potential saving.

Making Your Selection

Choosing the ideal Large Language Model (LLM) for your business is a critical task, but it doesn’t have to be an overwhelming one. With careful consideration of the aspects covered in this article, you will be well-equipped to make the strategic LLM development choices that will bring value to your enterprise.

At 10Pearls, we stand ready to help you achieve these goals. Our team of AI experts and seasoned technical professionals can provide the support and insights needed to harness the full potential of LLMs. Whether you're looking to optimize existing solutions or explore new AI-driven opportunities, 10Pearls can offer the expertise and comprehensive technical assistance to guarantee your success.