Emotional Responses to AI Systems: A Preview of Our Research

More and more businesses are using artificial intelligence systems and chatbots to enhance customer experience, but many questions remain about their long-term value. The most pressing among them: How should we measure the success of AI systems? How can we gauge end users’ true responses to them? And how can we create compelling and effective user experiences when there is no visual user interface? A better understanding of AI could help answer these questions and simplify UX for AI, which involves cognitive design, i.e., designing for how people think (not just what they want to see).

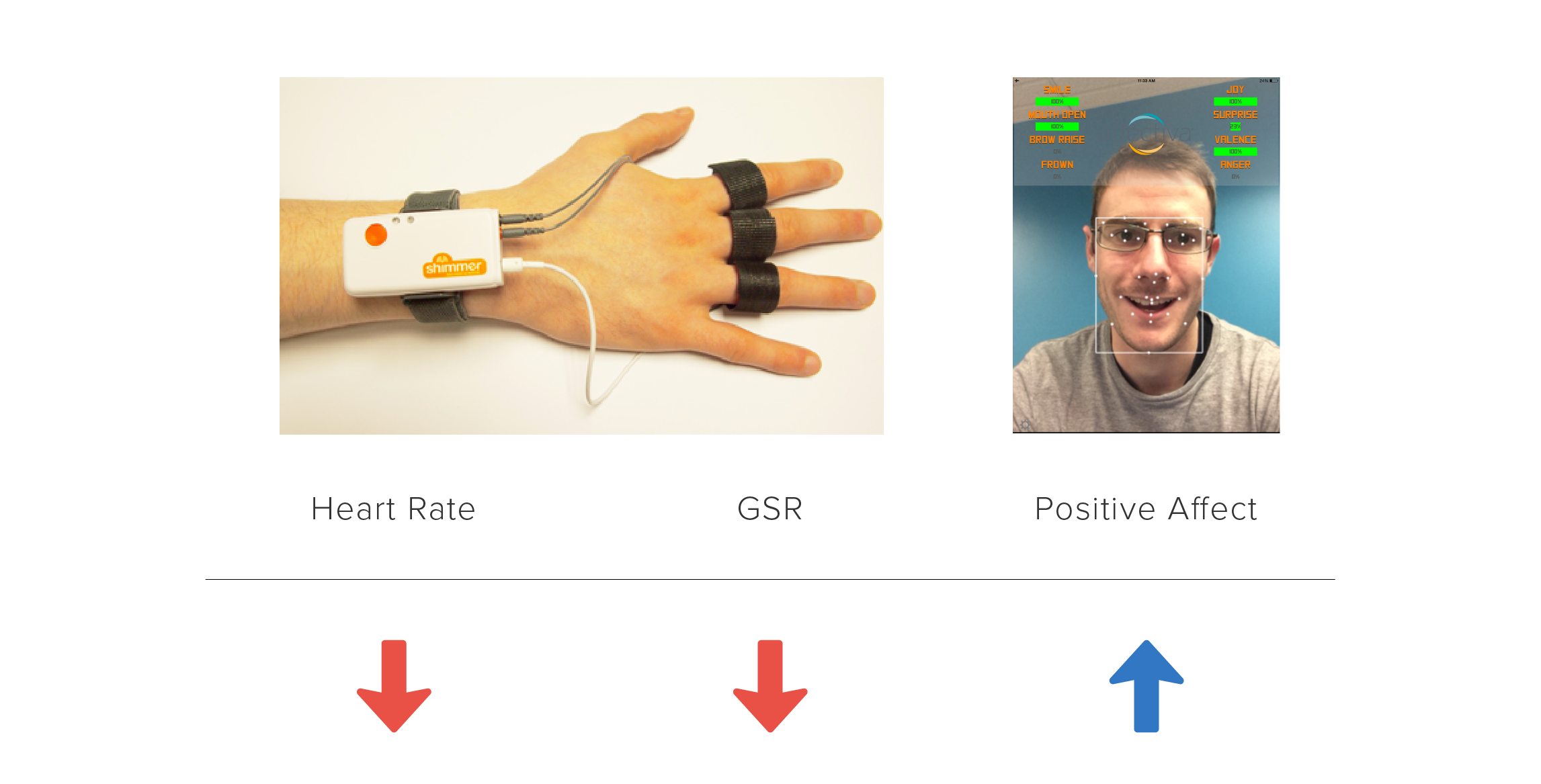

To get some answers, our customer experience team, led by John Whalen, researched how humans structure their commands to the most popular AI systems (Siri, Cortana, Alexa, and Google Assistant) and measured human reactions to AI responses using biometrics (facial emotion recognition, heart rate, and galvanic skin response, or GSR).

During the study, we asked a diverse group of users (young and old, native and non-native English speakers, techies and non-techies) to interact with the systems to:

- Request simple facts (e.g. the second tallest building in Chicago) and complex information (e.g. Microsoft’s last closing stock price)

- Retrieve travel and calendar information (e.g. flight status and traffic conditions)

- Ask subjective or tricky questions (e.g. “Siri, do you like me?” or “Alexa, do aliens exist?”)

- Issue commands (e.g. “Send a text to Max.”)

There were several intriguing findings.

First, we found that testers’ favorite AI systems were not always the ones that gave the most accurate answers. Instead, testers judged AI systems based on emotion as well as accuracy.

We also found a distinct physiological signature associated with a positive experience (heart rate was down, GSR was down, and positive facial expression was present).
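As a rough illustration of how such a signature might be checked in practice, here is a minimal Python sketch. It is not the method used in the study: the `Reading` fields, the units, and the idea of comparing each response against a per-user baseline reading are all assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass
class Reading:
    """One biometric snapshot. Field names and units are hypothetical."""
    heart_rate_bpm: float
    gsr_microsiemens: float
    positive_expression: bool  # e.g. output of a facial emotion classifier


def matches_positive_signature(baseline: Reading, response: Reading) -> bool:
    """Return True when heart rate and GSR are both below the baseline
    and a positive facial expression is present.

    Assumption: "down" means lower than a baseline taken before the
    interaction; the study does not specify how it was measured.
    """
    return (
        response.heart_rate_bpm < baseline.heart_rate_bpm
        and response.gsr_microsiemens < baseline.gsr_microsiemens
        and response.positive_expression
    )


# Example with made-up numbers
baseline = Reading(heart_rate_bpm=72.0, gsr_microsiemens=4.0, positive_expression=False)
after_answer = Reading(heart_rate_bpm=68.0, gsr_microsiemens=3.5, positive_expression=True)
is_positive = matches_positive_signature(baseline, after_answer)
```

A real pipeline would likely need smoothing and per-user calibration, since raw heart rate and GSR vary widely between people.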

The results suggest that if businesses want to create chatbots and AI systems that are successful in the market, they need to include physiological and emotional responses as part of their success metrics. They must also focus on humanizing user experiences and creating a UX that reflects how users truly think and speak, keeping in mind conversation cadence, differences in command styles, and context relevance.

John discussed the results of the study at O’Reilly’s AI Conference in San Francisco last week.